ScreenAI: A visual language model for UI and visually-situated language understanding - Related to moments, understanding, workers, era, point

ScreenAI: A visual language model for UI and visually-situated language understanding

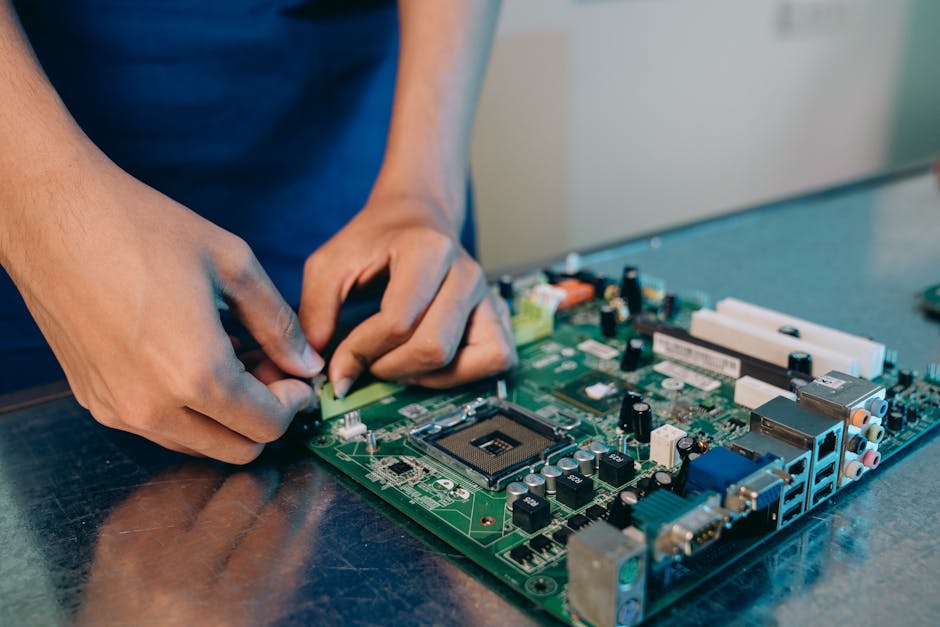

ScreenAI’s architecture is based on PaLI, composed of a multimodal encoder block and an autoregressive decoder. The PaLI encoder uses a vision transformer (ViT) that creates image embeddings and a multimodal encoder that takes the concatenation of the image and text embeddings as input. This flexible architecture allows ScreenAI to solve vision tasks that can be recast as text+image-to-text problems.

On top of the PaLI architecture, we employ a flexible patching strategy introduced in pix2struct. Instead of using a fixed-grid pattern, the grid dimensions are selected such that they preserve the native aspect ratio of the input image. This enables ScreenAI to work well across images of various aspect ratios.

The ScreenAI model is trained in two stages: a pre-training stage followed by a fine-tuning stage. First, self-supervised learning is applied to automatically generate data labels, which are then used to train ViT and the language model. ViT is frozen during the fine-tuning stage, where most data used is manually labeled by human raters.

AutoBNN is based on a line of research that over the past decade has yielded improved predictive accuracy by modeling time series using GPs with learn...

Après l’incident qu’a fait objet DeepSeek la semaine dernière, les chercheurs affirment avoir trouvé un moyen de contourner les mesures de protection ...

While building my own LLM-based application, I found many prompt engineering guides, but few equivalent guides for determining the temperature setting...

Why Budget Was a Turning Point for Gig Workers in the AI Era

The Union Budget 2025 unveiled several promising provisions for India’s population of [website] billion people, including the tech and startup community. An interesting inclusion was the mention of gig workers in India.

Finance minister Nirmala Sitharaman highlighted the role of gig workers in driving the new-age services economy. To recognise their contribution, the government will issue identity cards and register them on the e-Shram portal. They will also receive healthcare benefits under the Pradhan Mantri Jan Arogya Yojana (PM-JAY).

“This measure is likely to assist nearly one crore gig workers,” Sitharaman stated while presenting the Budget.

The labour and employment ministry launched the e-Shram portal in August 2021 to create a comprehensive database of India’s unorganised workers, including gig and platform workers.

However, the recent Budget introduced targeted measures for gig workers on the e-Shram portal. The initiative will not only formalise their status but also grant them access to healthcare benefits under the PM-JAY, a step that will ensure social security for the emerging workforce.

“It’s the first time they’ve showcased ID card issuance, which will, in essence, formalise gig work and potentially even make it more socially acceptable as a profession in a way, which hasn’t been the case thus far,” Madhav Krishna, founder and CEO of [website], told AIM following the Budget announcement.

Krishna called the government’s announcement to provide medical insurance under the PM-JAY scheme a significant move, especially considering elaborate discussions between the industry and the government about who would cover these costs.

“That’s also a very positive move, and it demonstrates that the government is committed to this,” introduced the founder of Vahan, an AI-driven recruitment business that provides a platform to connect blue-collar job seekers with employment opportunities.

[website] has successfully placed over 5 lakh workers in more than 480 cities to date. Its notable clients include industry giants like Zomato, Swiggy, Flipkart, Zepto, Blinkit, Amazon, Rapido, and Uber.

The startup has been increasingly using AI to smoothen operations in the gig field, helping workers get employed in quick commerce and e-commerce companies. Krishna explained that AI and ML are used to optimise order allocation and ensure that an adequate number of delivery agents are available in high-demand areas during peak hours.

“This efficient allocation process, in turn, may create opportunities for delivery agents to earn higher incomes,” he added.

While AI has been powering several developments, the question of AI eliminating jobs has not subsided. In the Economic Survey 2025, the Indian government acknowledged the ongoing discussions about AI’s impact on employment, referring to OpenAI’s statement about AI potentially replacing the workforce by 2025.

The survey found that 68% of employees expect AI to automate their jobs within five years, while 40% fear skill redundancy. This has only added fuel to the conversation of AI in India’s service-driven economy.

However, AI-led job loss may not truly affect the gig economy. Zepto co-founder and CEO Aadit Palicha, in an interview, emphasised the impact quick commerce has had on the employment of these sectors.

Talking about a survey conducted by Zepto on delivery partners, Palicha revealed that, as per data, most of the respondents were unemployed before being involved in gig work.

Palicha further mentioned that some of the respondents previously engaged in some form of informal employment, where they often didn’t receive minimum wages. Now, with these platforms, gig workers have been assured minimum wages.

“There were jobs for delivery agents with e-commerce platforms such as Flipkart, Amazon, etc. earlier as well. However, they were limited in numbers and earning potential,” Krishna stated.

“With the advent of quick commerce companies such as Swiggy and Zepto, there has been an exponential boom of opportunities, not just for delivery agents but also for roles like dark store staff,” he added.

Notably, the average income of delivery agents varies based on several factors such as seasons, cities they are operating from, and whether the corporation they are associated with is an e-commerce one or a q-commerce.

“Typically, the monthly salary range of delivery personnel is around ₹15,000 to ₹18,000, depending on their geographic location. During the festive season and on year-ends, the earnings can increase by an average of 20-30%,” Krishna told AIM.

With the rise in employment for gig workers and increased security via health insurance and other initiatives, the segment may be able to safeguard their jobs in an era that is questioning AI’s role in replacing jobs worldwide.

Here is a common scenario : An A/B test was conducted, where a random sample of units ([website] consumers) were selected for a cam...

While building my own LLM-based application, I found many prompt engineering guides, but few equivalent guides for determining the temperature setting...

Just weeks into its new-found fame, Chinese AI startup DeepSeek is moving at breakneck speed, toppling competitors and spa...

The Method of Moments Estimator for Gaussian Mixture Models

Audio Processing is one of the most essential application domains of digital signal processing (DSP) and machine learning. Modeling acoustic environments is an essential step in developing digital audio processing systems such as: speech recognition, speech enhancement, acoustic echo cancellation, etc.

Acoustic environments are filled with background noise that can have multiple insights. For example, when sitting in a coffee shop, walking down the street, or driving your car, you hear sounds that can be considered as interference or background noise. Such interferences do not necessarily follow the same statistical model, and hence, a mixture of models can be useful in modeling them.

Those statistical models can also be useful in classifying acoustic environments into different categories, [website], a quiet auditorium (class 1), or a slightly noisier room with closed windows (class 2), and a third option with windows open (class 3). In each case, the level of background noise can be modeled using a mixture of noise insights, each happening with a different probability and with a different acoustic level.

Another application of such models is in the simulation of acoustic noise in different environments based on which DSP and machine learning solutions can be designed to solve specific acoustic problems in practical audio systems such as interference cancellation, echo cancellation, speech recognition, speech enhancement, etc.

A simple statistical model that can be useful in such scenarios is the Gaussian Mixture Model (GMM) in which each of the different noise findings is assumed to follow a specific Gaussian distribution with a certain variance. All the distributions can be assumed to have zero mean while still being sufficiently accurate for this application, as also shown in this article.

Each of the GMM distributions has its own probability of contributing to the background noise. For example, there could be a consistent background noise that occurs most of the time, while other reports can be intermittent, such as the noise coming through windows, etc. All this has to be considered in our statistical model.

An example of simulated GMM data over time (normalized to the sampling time) is shown in the figure below in which there are two Gaussian noise reports, both of zero mean but with two different variances. In this example, the lower variance signal occurs more often with 90% probability hence the intermittent spikes in the generated data representing the signal with higher variance.

In other scenarios and depending on the application, it could be the other way around in which the high variance noise signal occurs more often (as will be shown in a later example in this article). Python code used to generate and analyze GMM data will also be shown later in this article.

Turning to a more formal modelling language, let’s assume that the background noise signal that is collected (using a high-quality microphone for example) is modeled as realizations of independent and identically distributed (iid) random variables that follow a GMM as shown below.

The modeling problem thus boils down to estimating the model parameters ([website], p1, σ²1, and σ²2) using the observed data (iid). In this article, we will be using the method of moments (MoM) estimator for such purpose.

To simplify things further, we can assume that the noise variances (σ²1 and σ²2) are known and that only the mixing parameter (p1) is to be estimated. The MoM estimator can be used to estimate more than one parameter ([website], p1, σ²1, and σ²2) as shown in Chapter 9 of the book: “Statistical Signal Processing: Estimation Theory”, by Steven Kay. However, in this example, we will assume that only p1 is unknown and to be estimated.

Since both gaussians in the GMM are zero mean, we will start with the second moment and try to obtain the unknown parameter p1 as a function of the second moment as follows.

Note that another simple method to obtain the moments of a random variable ([website], second moment or higher) is by using the moment generating function (MGF). A good textbook in probability theory that covers such topics, and more is: “Introduction to Probability for Data Science”, by Stanley H. Chan.

Before proceeding any further, we would like to quantify this estimator in terms of the fundamental properties of estimators such as bias, variance, consistency, etc. We will verify this later numerically with a Python example.

Starting with the estimator bias, we can show that the above estimator of p1 is indeed unbiased as follows.

We can then proceed to derive the variance of our estimator as follows.

It is also clear from the above analysis that the estimator is consistent since it is unbiased and also its variance decreases when the sample size (N) increases. We will also use the above formula of the p1 estimator variance in our Python numerical example (shown in detail later in this article) when comparing theory with practical numerical results.

Now let’s introduce some Python code and do some fun stuff!

First, we generate our data that follows a GMM with zero means and standard deviations equal to 2 and 10, respectively, as shown in the code below. In this example, the mixing parameter p1 = [website], and the sample size of the data equals 1000.

# Import the Python libraries that we will need in this GMM example import [website] as plt import numpy as np from scipy import stats # GMM data generation mu = 0 # both gaussians in GMM are zero mean sigma_1 = 2 # std dev of the first gaussian sigma_2 = 10 # std dev of the second gaussian norm_params = [website][[mu, sigma_1], [mu, sigma_2]]) sample_size = 1000 p1 = [website] # probability that the data point comes from first gaussian mixing_prob = [p1, (1-p1)] # A stream of indices from which to choose the component GMM_idx = [website], size=sample_size, replace=True, p=mixing_prob) # GMM_data is the GMM sample data GMM_data = np.fromiter(([website]*(norm_params[i])) for i in GMM_idx), dtype=np.float64).

Then we plot the histogram of the generated data versus the probability density function as shown below. The figure reveals the contribution of both Gaussian densities in the overall GMM, with each density scaled by its corresponding factor.

The Python code used to generate the above figure is shown below.

x1 = np.linspace([website], [website], sample_size) y1 = np.zeros_like(x1) # GMM probability distribution for (l, s), w in zip(norm_params, mixing_prob): y1 += [website], loc=l, scale=s) * w # Plot the GMM probability distribution versus the data histogram fig1, ax = plt.subplots() [website], bins=50, density=True, label="GMM data histogram", color = GRAY9) [website], p1*[website], scale=sigma_1).pdf(x1), label="p1 × first PDF",color = GREEN1,[website] [website], (1-p1)*[website], scale=sigma_2).pdf(x1), label="(1-p1) × second PDF",color = ORANGE1,[website] [website], y1, label="GMM distribution (PDF)",color = BLUE2,[website] ax.set_title("Data histogram vs. true distribution", fontsize=14, loc='left') ax.set_xlabel('Data value') ax.set_ylabel('Probability') [website] [website].

After that, we compute the estimate of the mixing parameter p1 that we derived earlier using MoM and which is shown here again below for reference.

The Python code used to compute the above equation using our GMM sample data is shown below.

# Estimate the mixing parameter p1 from the sample data using MoM estimator p1_hat = (sum(pow(x,2) for x in GMM_data) / len(GMM_data) - pow(sigma_2,2)) /(pow(sigma_1,2) - pow(sigma_2,2)).

In order to properly assess this estimator, we use Monte Carlo simulation by generating multiple realizations of the GMM data and estimate p1 for each realization as shown in the Python code below.

# Monte Carlo simulation of the MoM estimator num_monte_carlo_iterations = 500 p1_est = [website],1)) sample_size = 1000 p1 = [website] # probability that the data point comes from first gaussian mixing_prob = [p1, (1-p1)] # A stream of indices from which to choose the component GMM_idx = [website], size=sample_size, replace=True, p=mixing_prob) for iteration in range(num_monte_carlo_iterations): sample_data = np.fromiter(([website]*(norm_params[i])) for i in GMM_idx)) p1_est[iteration] = (sum(pow(x,2) for x in sample_data)/len(sample_data) - pow(sigma_2,2))/(pow(sigma_1,2) - pow(sigma_2,2)).

Then, we check for the bias and variance of our estimator and compare to the theoretical results that we derived earlier as shown below.

p1_est_mean = [website] p1_est_var = [website]**2)/num_monte_carlo_iterations p1_theoritical_var_num = 3*p1*pow(sigma_1,4) + 3*(1-p1)*pow(sigma_2,4) - pow(p1*pow(sigma_1,2) + (1-p1)*pow(sigma_2,2),2) p1_theoritical_var_den = sample_size*pow(sigma_1**2-sigma_2**2,2) p1_theoritical_var = p1_theoritical_var_num/p1_theoritical_var_den print('Sample variance of MoM estimator of p1 = [website]' % p1_est_var) print('Theoretical variance of MoM estimator of p1 = [website]' % p1_theoritical_var) print('Mean of MoM estimator of p1 = [website]' % p1_est_mean) # Below are the results of the above code Sample variance of MoM estimator of p1 = [website] Theoretical variance of MoM estimator of p1 = [website] Mean of MoM estimator of p1 = [website].

We can observe from the above results that the mean of the p1 estimate equals [website] which is very close to the true parameter p1 = [website] This mean gets even closer to the true parameter as the sample size increases. Thus, we have numerically shown that the estimator is unbiased as confirmed by the theoretical results done earlier.

Moreover, the sample variance of the p1 estimator ([website] is almost identical to the theoretical variance ([website] which is beautiful.

It is always a happy moment when theory matches practice!

All images in this article, unless otherwise noted, are by the author.

OpenAI CEO Sam Altman is set to visit India for the second time this Wednesday. His Asia tour has already seen several product launches so far and his...

Here is a common scenario : An A/B test was conducted, where a random sample of units ([website] end-clients) were selected for a cam...

Developer platform GitHub has introduced Agent Mode for GitHub Copilot, giving its AI-powered coding assistant the ability to iterate on its own code,...

Market Impact Analysis

Market Growth Trend

| 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 |

|---|---|---|---|---|---|---|

| 23.1% | 27.8% | 29.2% | 32.4% | 34.2% | 35.2% | 35.6% |

Quarterly Growth Rate

| Q1 2024 | Q2 2024 | Q3 2024 | Q4 2024 |

|---|---|---|---|

| 32.5% | 34.8% | 36.2% | 35.6% |

Market Segments and Growth Drivers

| Segment | Market Share | Growth Rate |

|---|---|---|

| Machine Learning | 29% | 38.4% |

| Computer Vision | 18% | 35.7% |

| Natural Language Processing | 24% | 41.5% |

| Robotics | 15% | 22.3% |

| Other AI Technologies | 14% | 31.8% |

Technology Maturity Curve

Different technologies within the ecosystem are at varying stages of maturity:

Competitive Landscape Analysis

| Company | Market Share |

|---|---|

| Google AI | 18.3% |

| Microsoft AI | 15.7% |

| IBM Watson | 11.2% |

| Amazon AI | 9.8% |

| OpenAI | 8.4% |

Future Outlook and Predictions

The Language Screenai Visual landscape is evolving rapidly, driven by technological advancements, changing threat vectors, and shifting business requirements. Based on current trends and expert analyses, we can anticipate several significant developments across different time horizons:

Year-by-Year Technology Evolution

Based on current trajectory and expert analyses, we can project the following development timeline:

Technology Maturity Curve

Different technologies within the ecosystem are at varying stages of maturity, influencing adoption timelines and investment priorities:

Innovation Trigger

- Generative AI for specialized domains

- Blockchain for supply chain verification

Peak of Inflated Expectations

- Digital twins for business processes

- Quantum-resistant cryptography

Trough of Disillusionment

- Consumer AR/VR applications

- General-purpose blockchain

Slope of Enlightenment

- AI-driven analytics

- Edge computing

Plateau of Productivity

- Cloud infrastructure

- Mobile applications

Technology Evolution Timeline

- Improved generative models

- specialized AI applications

- AI-human collaboration systems

- multimodal AI platforms

- General AI capabilities

- AI-driven scientific breakthroughs

Expert Perspectives

Leading experts in the ai tech sector provide diverse perspectives on how the landscape will evolve over the coming years:

"The next frontier is AI systems that can reason across modalities and domains with minimal human guidance."

— AI Researcher

"Organizations that develop effective AI governance frameworks will gain competitive advantage."

— Industry Analyst

"The AI talent gap remains a critical barrier to implementation for most enterprises."

— Chief AI Officer

Areas of Expert Consensus

- Acceleration of Innovation: The pace of technological evolution will continue to increase

- Practical Integration: Focus will shift from proof-of-concept to operational deployment

- Human-Technology Partnership: Most effective implementations will optimize human-machine collaboration

- Regulatory Influence: Regulatory frameworks will increasingly shape technology development

Short-Term Outlook (1-2 Years)

In the immediate future, organizations will focus on implementing and optimizing currently available technologies to address pressing ai tech challenges:

- Improved generative models

- specialized AI applications

- enhanced AI ethics frameworks

These developments will be characterized by incremental improvements to existing frameworks rather than revolutionary changes, with emphasis on practical deployment and measurable outcomes.

Mid-Term Outlook (3-5 Years)

As technologies mature and organizations adapt, more substantial transformations will emerge in how security is approached and implemented:

- AI-human collaboration systems

- multimodal AI platforms

- democratized AI development

This period will see significant changes in security architecture and operational models, with increasing automation and integration between previously siloed security functions. Organizations will shift from reactive to proactive security postures.

Long-Term Outlook (5+ Years)

Looking further ahead, more fundamental shifts will reshape how cybersecurity is conceptualized and implemented across digital ecosystems:

- General AI capabilities

- AI-driven scientific breakthroughs

- new computing paradigms

These long-term developments will likely require significant technical breakthroughs, new regulatory frameworks, and evolution in how organizations approach security as a fundamental business function rather than a technical discipline.

Key Risk Factors and Uncertainties

Several critical factors could significantly impact the trajectory of ai tech evolution:

Organizations should monitor these factors closely and develop contingency strategies to mitigate potential negative impacts on technology implementation timelines.

Alternative Future Scenarios

The evolution of technology can follow different paths depending on various factors including regulatory developments, investment trends, technological breakthroughs, and market adoption. We analyze three potential scenarios:

Optimistic Scenario

Responsible AI driving innovation while minimizing societal disruption

Key Drivers: Supportive regulatory environment, significant research breakthroughs, strong market incentives, and rapid user adoption.

Probability: 25-30%

Base Case Scenario

Incremental adoption with mixed societal impacts and ongoing ethical challenges

Key Drivers: Balanced regulatory approach, steady technological progress, and selective implementation based on clear ROI.

Probability: 50-60%

Conservative Scenario

Technical and ethical barriers creating significant implementation challenges

Key Drivers: Restrictive regulations, technical limitations, implementation challenges, and risk-averse organizational cultures.

Probability: 15-20%

Scenario Comparison Matrix

| Factor | Optimistic | Base Case | Conservative |

|---|---|---|---|

| Implementation Timeline | Accelerated | Steady | Delayed |

| Market Adoption | Widespread | Selective | Limited |

| Technology Evolution | Rapid | Progressive | Incremental |

| Regulatory Environment | Supportive | Balanced | Restrictive |

| Business Impact | Transformative | Significant | Modest |

Transformational Impact

Redefinition of knowledge work, automation of creative processes. This evolution will necessitate significant changes in organizational structures, talent development, and strategic planning processes.

The convergence of multiple technological trends—including artificial intelligence, quantum computing, and ubiquitous connectivity—will create both unprecedented security challenges and innovative defensive capabilities.

Implementation Challenges

Ethical concerns, computing resource limitations, talent shortages. Organizations will need to develop comprehensive change management strategies to successfully navigate these transitions.

Regulatory uncertainty, particularly around emerging technologies like AI in security applications, will require flexible security architectures that can adapt to evolving compliance requirements.

Key Innovations to Watch

Multimodal learning, resource-efficient AI, transparent decision systems. Organizations should monitor these developments closely to maintain competitive advantages and effective security postures.

Strategic investments in research partnerships, technology pilots, and talent development will position forward-thinking organizations to leverage these innovations early in their development cycle.

Technical Glossary

Key technical terms and definitions to help understand the technologies discussed in this article.

Understanding the following technical concepts is essential for grasping the full implications of the security threats and defensive measures discussed in this article. These definitions provide context for both technical and non-technical readers.

platform intermediate

embeddings intermediate

API beginner

How APIs enable communication between different software systems

How APIs enable communication between different software systems