Why Chip Performance Gains Now Depend More on Design Than Raw Power

For decades, the semiconductor industry followed a simple and predictable rule: more transistors, higher clock speeds, and greater power consumption produced faster chips. Performance gains were visible, measurable, and largely linear. That era is over. In 2026, the most meaningful performance improvements no longer come from raw power alone, but from how chips are designed, structured, and optimized.

The shift from brute-force scaling to design-led performance is one of the most important changes in modern computing. It affects everything from consumer devices and gaming hardware to data centers and artificial intelligence infrastructure. Understanding this transition explains why newer chips often outperform older ones without dramatic increases in clock speed or power draw.

The Limits of Raw Power Are Now Structural

Raising clock speeds and power budgets used to be the easiest way to improve performance. Today, those approaches face hard limits.

Higher power leads to:

- Sharply rising heat output, because power grows faster than clock speed

- Reduced efficiency

- Thermal throttling

- Shorter component lifespan

Modern chips already operate close to their thermal and electrical limits. Simply pushing more power through silicon no longer produces proportional gains. In many cases, it reduces real-world performance by triggering throttling and inefficiency.

As a result, raw power now yields diminishing returns rather than serving as a primary growth driver.
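A rough way to see why extra power stops paying off is the textbook approximation for CMOS switching power, P = C × V² × f. The sketch below is illustrative only: the capacitance, voltage, and frequency values are hypothetical, and so is the assumption that a 20% clock increase needs about 10% more voltage.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Textbook CMOS switching-power approximation: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical baseline chip (illustrative values, not measurements).
base_power = dynamic_power(capacitance_f=1e-9, voltage_v=1.0, frequency_hz=3.0e9)

# Assumption: a 20% higher clock requires roughly 10% more voltage to stay stable.
boosted_power = dynamic_power(capacitance_f=1e-9, voltage_v=1.1, frequency_hz=3.6e9)

power_ratio = boosted_power / base_power  # roughly 1.45x the power
clock_ratio = 3.6e9 / 3.0e9               # only 1.2x the clock speed

print(f"clock: {clock_ratio:.2f}x, power: {power_ratio:.2f}x")
```

Under these assumptions, power grows noticeably faster than frequency, and that is before leakage and throttling are taken into account.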

Post–Moore’s Law Computing Has Changed the Rules

Moore’s Law once ensured predictable performance improvements through transistor scaling. As scaling slows, the industry has been forced to find performance elsewhere.

That “elsewhere” is design.

Performance gains now come from:

- Architectural efficiency

- Parallelism

- Data locality

- Smarter scheduling

- Specialized accelerators

Design choices now determine how effectively transistors are used—not just how many exist.

Architecture Matters More Than Frequency

Two chips with identical clock speeds can deliver vastly different performance depending on architecture. Instruction pipelines, execution width, branch prediction, cache hierarchy, and scheduling logic all influence how much work gets done per cycle.

Modern architectures focus on:

- Higher instructions per clock (IPC)

- Reduced stalls and latency

- Better utilization of execution units

This is why newer processors often outperform older ones at lower frequencies. They are faster not because they run harder, but because they run smarter.
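As a first-order illustration, throughput can be modeled as IPC multiplied by clock frequency. The two chips below are hypothetical, and the IPC figures are assumptions chosen only to show how a lower-clocked design can still come out ahead.

```python
def instructions_per_second(ipc: float, clock_hz: float) -> float:
    """First-order throughput model: instructions per cycle times cycles per second."""
    return ipc * clock_hz


# Hypothetical chips; IPC values are illustrative assumptions.
older_chip = instructions_per_second(ipc=2.0, clock_hz=4.0e9)  # higher clock, narrower core
newer_chip = instructions_per_second(ipc=3.5, clock_hz=3.5e9)  # lower clock, wider core

speedup = newer_chip / older_chip
print(f"newer chip is {speedup:.2f}x faster despite a lower clock")
```

Real IPC varies with the workload, so this model only captures the general direction of the effect.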

Performance per Watt Has Become the Primary Metric

In modern chip design, performance per watt is often more important than peak performance.

This shift is driven by:

- Data center energy costs

- Laptop battery life expectations

- Thermal constraints

- Environmental pressure

A chip that delivers 90% of peak performance at 60% of the power is more valuable than one that achieves marginally higher performance at double the energy cost.

Design optimizations that reduce wasted work and unnecessary power consumption now deliver the most meaningful gains.
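The comparison above can be worked through as simple arithmetic. In this sketch all figures are relative to a hypothetical baseline chip, and the 5% performance edge assigned to the power-hungry design is an assumption standing in for "marginally higher".

```python
def perf_per_watt(relative_performance: float, relative_power: float) -> float:
    """Efficiency relative to a baseline chip with performance 1.0 and power 1.0."""
    return relative_performance / relative_power


# 90% of peak performance at 60% of the power (figures from the text).
efficient_chip = perf_per_watt(relative_performance=0.90, relative_power=0.60)

# Assumption: "marginally higher" is modeled as 5% more performance at double the power.
power_hungry_chip = perf_per_watt(relative_performance=1.05, relative_power=2.00)

print(f"efficient: {efficient_chip:.2f}, power-hungry: {power_hungry_chip:.3f}")
```

Under these assumptions the efficient design delivers about 1.5 times the baseline's work per watt, while the power-hungry one delivers roughly half of it.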

Cache Design and Data Movement Define Speed

In memory-bound workloads, modern processors can spend more time waiting for data than executing instructions. As a result, memory hierarchy design has become central to performance.

Advanced chip designs prioritize:

- Larger and smarter caches

- Faster cache-to-core communication

- Reduced memory latency

- Improved data locality

In many workloads, improving data movement efficiency produces larger gains than increasing compute power.
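One common way to reason about this is the average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The latencies and miss rates below are hypothetical round numbers, not figures for any particular processor.

```python
def average_memory_access_time(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """AMAT = hit time + miss rate * miss penalty (single cache level)."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical cache: 1 ns hit, 100 ns trip to main memory on a miss.
baseline_ns = average_memory_access_time(hit_time_ns=1.0, miss_rate=0.10, miss_penalty_ns=100.0)

# Same cache latency, but better locality halves the miss rate.
improved_ns = average_memory_access_time(hit_time_ns=1.0, miss_rate=0.05, miss_penalty_ns=100.0)

print(f"baseline: {baseline_ns:.1f} ns, improved locality: {improved_ns:.1f} ns")
```

In this sketch, halving the miss rate nearly halves the average access time without touching the compute units at all.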

Specialization Beats Generalization

General-purpose CPUs are no longer expected to handle every task efficiently. Instead, modern systems rely on heterogeneous designs.

These include:

- AI accelerators

- Media engines

- Security processors

- Graphics and compute units

By offloading specific tasks to specialized hardware, systems achieve dramatic performance improvements without increasing raw CPU power.

This design-led specialization is one of the biggest reasons performance continues to grow despite slowing transistor scaling.
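Amdahl's law gives a simple estimate of what offloading can achieve: overall speedup depends on the fraction of the work that is accelerated and on how much faster that part runs. The 60% offloadable fraction and 20x accelerator speedup below are assumptions for illustration.

```python
def amdahl_speedup(accelerated_fraction: float, accelerator_speedup: float) -> float:
    """Overall speedup when only part of a workload runs on faster hardware."""
    serial_part = 1.0 - accelerated_fraction
    return 1.0 / (serial_part + accelerated_fraction / accelerator_speedup)


# Assumption: 60% of the workload can move to an accelerator that runs it 20x faster.
offload_speedup = amdahl_speedup(accelerated_fraction=0.6, accelerator_speedup=20.0)

# For comparison: a hypothetical CPU that is simply 20% faster at everything.
faster_cpu_speedup = 1.2

print(f"offload: {offload_speedup:.2f}x vs. faster CPU: {faster_cpu_speedup:.2f}x")
```

The same formula also shows the limit of specialization: the part of the workload that stays on the CPU caps the overall gain.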

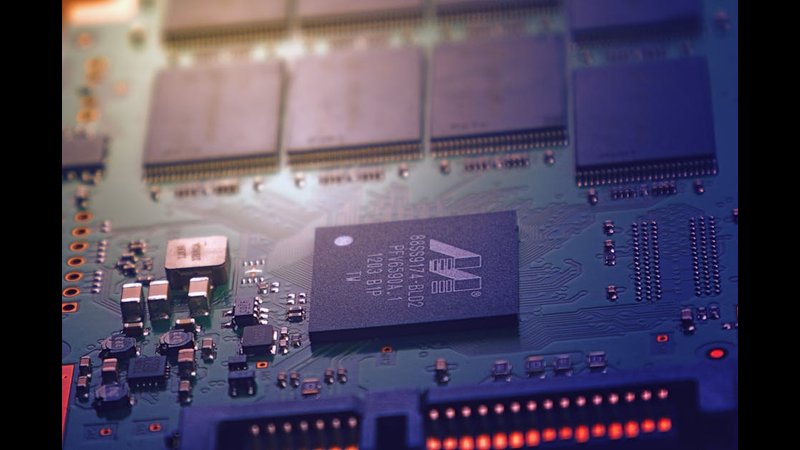

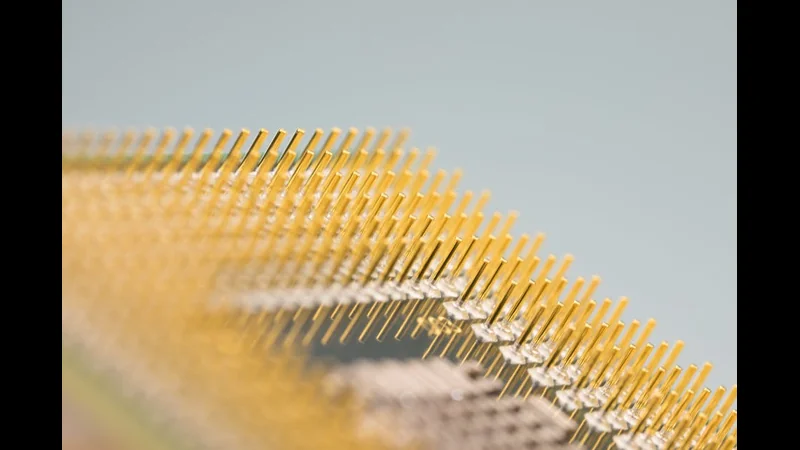

Chiplets and Modular Design Change Everything

Monolithic chip design is increasingly inefficient at advanced nodes, where large dies are costly to manufacture and more likely to contain defects. Chiplet-based architectures allow designers to mix and match components built on different process technologies.

Design advantages include:

- Better manufacturing yields

- Flexible scaling

- Optimized cost-performance balance

- Faster innovation cycles

Chip performance gains now depend heavily on how these chiplets are connected, synchronized, and managed: factors rooted entirely in design, not raw power.
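The yield advantage can be sketched with the simple Poisson defect model, where yield falls off exponentially with die area. The die sizes and defect density below are hypothetical. The sketch also assumes that chiplets are tested before assembly, and it ignores packaging and interconnect costs.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Simple Poisson yield model: Y = exp(-area * defect density)."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int, defects_per_cm2: float) -> float:
    """Average silicon area consumed per working product, assuming each die
    is tested individually so that only defective dies are discarded."""
    return dies_per_product * die_area_mm2 / poisson_yield(die_area_mm2, defects_per_cm2)


DEFECT_DENSITY = 0.1  # defects per cm^2, an illustrative assumption

# One 600 mm^2 monolithic die vs. four 150 mm^2 chiplets (hypothetical sizes).
monolithic_mm2 = silicon_per_good_product(600.0, 1, DEFECT_DENSITY)
chiplet_mm2 = silicon_per_good_product(150.0, 4, DEFECT_DENSITY)

print(f"monolithic: {monolithic_mm2:.0f} mm^2, chiplets: {chiplet_mm2:.0f} mm^2 per good product")
```

Under these assumptions the chiplet approach wastes far less silicon, because a single defect scraps a small die rather than the whole product.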

AI Has Redefined What “Performance” Means

Artificial intelligence workloads have reshaped chip priorities. Peak clock speed matters far less than throughput, efficiency, and parallelism.

AI-driven design focuses on:

- Matrix operations

- Parallel data paths

- Specialized instruction sets

- Memory bandwidth optimization

Chips optimized for AI demonstrate how intelligent design can outperform brute-force power by orders of magnitude in targeted workloads.
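The roofline model is one way to see why memory bandwidth matters as much as peak compute for these workloads: attainable throughput is the lower of the compute peak and bandwidth times arithmetic intensity. The peak and bandwidth values below are hypothetical and do not describe any real accelerator.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


# Hypothetical accelerator: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
PEAK_FLOPS = 100e12
BANDWIDTH = 2e12

# Low arithmetic intensity (10 FLOPs per byte moved): limited by memory bandwidth.
memory_bound = attainable_flops(PEAK_FLOPS, BANDWIDTH, flops_per_byte=10.0)

# High arithmetic intensity (100 FLOPs per byte moved): limited by peak compute.
compute_bound = attainable_flops(PEAK_FLOPS, BANDWIDTH, flops_per_byte=100.0)

print(f"memory-bound: {memory_bound / 1e12:.0f} TFLOP/s, compute-bound: {compute_bound / 1e12:.0f} TFLOP/s")
```

In the memory-bound case, adding more compute units would change nothing. Only more bandwidth or better data reuse raises the ceiling.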

Software–Hardware Co-Design Is Now Essential

Performance gains increasingly come from designing hardware alongside software, not in isolation.

This approach enables:

- Workload-aware architectures

- Compiler-driven optimization

- Predictable performance behavior

- Reduced overhead

When hardware and software are designed together, efficiency improves without increasing power or frequency.

Why Consumers See Slower “Spec Jumps”

Many users perceive slower progress because headline specs no longer double every generation. However, real-world performance continues to improve—just in less obvious ways.

Design-led gains often show up as:

- Better sustained performance

- Lower power consumption

- Quieter operation

- Improved multitasking

- Faster real-world workloads

The gains are real, but they are no longer captured by a single number.

The Future of Chip Performance

Future performance gains will depend on:

- Smarter architectures

- Better integration between components

- Advanced packaging

- Software-aware design

- Efficiency-focused optimization

Raw power will still matter—but only as one variable in a much larger design equation.

Conclusion

Chip performance gains now depend more on design than raw power because the industry has reached the physical and economic limits of brute-force scaling. In this new era, architectural intelligence, efficiency, specialization, and data movement define speed far more than clock frequency or wattage.

The most powerful chips of the future will not be the ones that consume the most energy, but the ones that use it most intelligently.